SIP Server

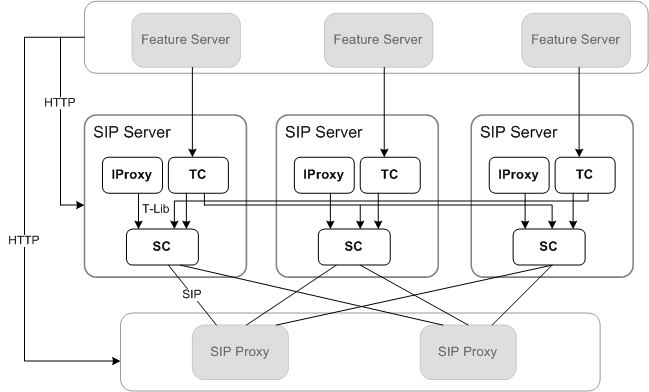

With SIP Server in cluster mode, you can create a highly scalable architecture in which the system's capacity can be scaled up or down with minimal configuration changes. You can add new instances of SIP Server to the cluster at any time to increase its capacity. You can also reduce the cluster size when you need to, by removing any unnecessary nodes.

SIP Server in cluster mode is designed to support high call volumes over a large number of SIP phones and T-Library desktops. SIP Server in cluster mode is also able to support an increased number of Genesys reporting and routing components, in which large volumes of data are produced.

When working in cluster mode, SIP Server uses the following three internal modules to provide cluster functionality:

- Session Controller—call processing engine

- T-Controller—T-Library interface for agent desktops and Genesys servers, which monitor agent- and DN-related T-Events

- Interaction Proxy—T-Library interface that distributes interactions across the clients in a pool

Important: All three modules operate within one executable file.

Session Controller

Stateless call processing engine

Session Controller (SC) is an independent call processing engine. SC operation is comparable to how SIP Server works in stand-alone mode. The main difference is that SC does not store any information about the state of call center peers—DNs or agents. SC obtains all information required to process the call from the T-Controller (TC) module and the SIP Feature Server.

Call ownership

To process a higher call volume, the cluster is scaled up by adding new instances of SIP Server. The cluster is designed to distribute all calls uniformly across all existing SCs. This distribution applies both to calls that are initiated through the T-Library interface (3pcc calls) and to calls that are originated through the SIP protocol (1pcc calls). Each call is processed by one SC. All manipulations required for this call, such as transfers and conferences, are performed on this SC. A call is never transferred from one SC in the cluster to another. The cluster architecture ensures that the same SC processes all related calls, such as main and consultation calls initiated from the same DN. As a result, all regular treatments, such as completing a transfer or conference, can be applied to a call.

Limitation on processing calls from different SCs on one DN:

This call processing model creates a limitation in processing independent calls that are delivered to the same DN from different SCs. For example, two customers call the same agent simultaneously and the two inbound calls are delivered for processing to two different SCs. An agent can accept both calls, but cannot switch between the calls or merge them using an agent desktop. Those operations must be performed directly from a SIP phone.

T-Events distribution

SIP Server in cluster mode provides all functionality that is available in a stand-alone SIP Server in terms of call processing and call representation through the T-Library interface. In a cluster architecture, all T-Library clients connect to SIP Server through its cluster interfaces—T-Controller (TC) and Interaction Proxy (IProxy). The only exception to this rule is Universal Routing Server (URS), which connects directly to the default SC listening port. SC generates the standard sequence of T-Events for each call it processes and distributes those events through TC and IProxy interfaces to the T-Library clients.

T-Controller

Scalability on the number of agents

Stand-alone SIP Server deployments are limited by the number of agents that can connect to one instance of SIP Server. The cluster architecture resolves this problem by providing a TC interface layer. This layer consists of the TC modules of all SIP Server instances, connected to each other. Each TC maintains the states of an equal share of all devices operating in the cluster. If an agent is logged in to a device, the agent's state is stored in the same TC. This approach distributes the processing load related to the maintenance of agent and DN states across all TCs in the cluster. The TC layer can be scaled up or down by adding SIP Server instances to the cluster or removing them from it. DNs are redistributed across the new number of TC instances automatically, without any reconfiguration.

Agent desktops

Agent desktops connect to the cluster TC layer. The architecture described above does not require an agent desktop to connect to the TC where the state of the corresponding device is maintained. The agent desktop can connect to any TC in the cluster. In any case, the TC layer infrastructure guarantees that all T-Events generated for the DN that the desktop is registered for are delivered to the desktop. Even though the desktop can connect to any TC in the cluster, the best performance is achieved if it connects to the TC that maintains the state of the device the desktop is registering for. The TC layer implements a protocol to inform the client about the location of the TC to which the client should connect. Clients that support this protocol are expected to disconnect from the current TC and reconnect to the other TC using the address received in the T-Event. Genesys Interaction Workspace supports this functionality. More information about how agent desktops are configured to connect to the cluster can be found in the following section:

Bulk registrants

Bulk registrants are T-Library clients that have to monitor all DNs owned by a particular TC. Genesys reporting and routing components, such as Stat Server and ICON, monitor all DNs in the whole environment. In the cluster architecture, those clients connect to every TC instance and register for all DNs owned by each TC.

To simplify the registration procedure for bulk registrants and to optimize network traffic, bulk registration is performed with one request, which has to be submitted to the TC: TPrivateService (AttributePrivateMsgId=8197).

Once this request is processed, the TC distributes T-Events for all DNs that it owns to the respective client. The client can select the events it needs to receive through this connection by using the InputMask extension, and can filter out unnecessary UserData by using the UdataFilter extension.

The state of each active DN in the TC is reported to the bulk-registrant client when it registers. The TC generates one EventPrivateInfo (AttributePrivateMessageId=8197) per DN. Each message contains the same information as EventAddressInfo and it also carries some additional parameters.

Interaction Proxy

Scalability of call-monitoring clients

Interaction Proxy (IProxy) is a new T-Library interface of SIP Server, which is activated when SIP Server is operating in cluster mode. This interface distributes call-related T-Events across a pool of T-Library clients. Examples of such clients are ICON and Stat Server. Those clients need to receive information about all calls handled in the system. However, one client often cannot handle the call load that is processed by one SIP Server. The IProxy interface allows several clients of the same type (for example, multiple Stat Server instances) to identify themselves as one pool. The IProxy then balances the call load uniformly across the clients in that pool, so that each client handles only a fraction of the load. In this way, the IProxy interface makes it possible to scale up the number of T-Library clients that monitor the calls processed by a SIP Server working as a cluster node.

Distributing interactions

The IProxy interface works with interactions. One interaction may contain one or multiple calls. For example, one interaction contains both the primary and the consultation call. The IProxy distributes interactions to its clients. This means that all T-Events related to all calls that belong to the same interaction are sent to the same client.

Each client in a pool handles a set of interactions that is unique compared to the other clients in the same pool, but the sets of interactions sent to different pools are identical. For example, if a pool of ICONs and a pool of Stat Servers are connected to the same IProxy interface, then both pools have the identical set of interactions reported.
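The distribution rule above can be pictured with a small sketch. It is only an illustration: the source does not describe how the IProxy actually balances the load, so the hash-based choice below is an assumption, and `route_interaction`, the pool names, and the client names are hypothetical.

```python
import hashlib
from typing import Dict, List


def client_for_interaction(interaction_id: str, members: List[str]) -> str:
    """Pick one pool member for an interaction (assumed hash-based balancing)."""
    ordered = sorted(members)  # make the choice independent of list order
    digest = hashlib.sha256(interaction_id.encode("utf-8")).digest()
    return ordered[int.from_bytes(digest[:8], "big") % len(ordered)]


def route_interaction(interaction_id: str, pools: Dict[str, List[str]]) -> Dict[str, str]:
    """Return, for every pool, the single client that receives all T-Events
    of the given interaction. Every pool sees every interaction."""
    return {
        pool: client_for_interaction(interaction_id, members)
        for pool, members in pools.items()
    }


pools = {
    "icon-pool": ["icon-1", "icon-2"],
    "stat-pool": ["stat-1", "stat-2", "stat-3"],
}
# Primary and consultation calls share one interaction ID, so their
# events always reach the same client within each pool.
print(route_interaction("interaction-42", pools))
```

Because the choice depends only on the interaction identifier, all calls of one interaction land on the same client, while each pool still receives the complete set of interactions.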

Client connections to IProxy

The IProxy client uses a TPrivateService request (8192) to identify itself as a member of a new or existing pool. This request must carry the ClusterId and NodeId extensions, where ClusterId must be the same for all members of the same pool and NodeId must have a unique value for each pool member. This information allows the IProxy to add a new member to a pool and to start sending an equal share of interactions to it. A cluster client registration request can also carry an InputMask extension, which contains a list of events this client wants to subscribe to.

The IProxy sends information about all active interactions to a client that registers for a new pool, that is, when this client is the first in the pool. The information is distributed in the form of a snapshot, which consists of the call-monitoring events EventCallCreated and EventPartyAdded. This information allows the IProxy client to build a valid view of active interactions. The snapshot is sent only to the first client in a pool.

Reliability of client connections to IProxy

The IProxy client must provide the SessionId extension in its registration request sent to the IProxy. The value of this extension is generated by a client and it is used by both the client and IProxy to identify a session established for this client. The session is used to make temporary network disconnections seamless for the IProxy clients. The IProxy starts accumulating events when the client disconnects. If the client reconnects back to the IProxy in a short period of time and provides the SessionId that was used for the original session, the IProxy sends all T-Events accumulated while the client was disconnected to this client. That way no events are lost for the client as a result of the network disconnection.
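A minimal sketch of the session behavior described above follows. The class and method names are hypothetical, and the "short period of time" after which a session would be discarded is not specified in the source, so no timeout is modeled here.

```python
from typing import List


class IProxySession:
    """Toy model of one client session, keyed by a client-generated SessionId."""

    def __init__(self, session_id: str) -> None:
        self.session_id = session_id
        self.connected = True
        self.delivered: List[str] = []  # events already sent to the client
        self.pending: List[str] = []    # events accumulated while disconnected

    def on_event(self, event: str) -> None:
        # Deliver immediately when connected, otherwise accumulate.
        if self.connected:
            self.delivered.append(event)
        else:
            self.pending.append(event)

    def disconnect(self) -> None:
        self.connected = False

    def reconnect(self, session_id: str) -> bool:
        """Resume the session only if the original SessionId is presented."""
        if session_id != self.session_id:
            return False
        self.connected = True
        self.delivered.extend(self.pending)  # replay in original order
        self.pending.clear()
        return True


session = IProxySession("session-1")
session.on_event("EventCallCreated")
session.disconnect()
session.on_event("EventPartyAdded")      # buffered, not lost
session.reconnect("session-1")
print(session.delivered)
```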

SIP Server Switch in the Cluster

Simplified switch configuration

The SIP Server switch used in a cluster does not contain DNs of type Extension or ACD Position, and Agent Logins are not configured in the switch. This is a major difference between the cluster and stand-alone SIP Server switch configurations: it greatly simplifies switch provisioning and improves the scalability of the whole solution. In the stand-alone configuration, two objects (a DN and an Agent Login) must be created for each agent, and a number of parameters must be configured for each of those objects. In the cluster, information about agent DNs is stored in the Feature Server, and Agent Login objects are not used at all.

Solution scalability improvement

The light configuration of the cluster switch improves the scalability of the whole Genesys solution. A large number of agent-related objects configured under the Switch object in the configuration environment impacts the performance of Genesys components and affects the scalability of the solution as a whole. SIP Server, like some other Genesys components, is designed to read all DNs configured in the configuration environment at startup. The more DNs are configured, the longer it takes for a component to read the configuration and become ready for call processing. In particular, this lengthens the recovery time after a component failure. Reading a large number of DNs at startup may also flood the network and impact the service quality of active calls.

Device Profiles

A further simplification of cluster switch provisioning is that there is no need to configure any DN-level parameters, even in the Feature Server where the DNs are stored. The cluster introduces a new approach to device provisioning: DN-level parameters are configured in device profiles, which are represented as VoIP Service DNs. Each profile is used for a group of agent devices. SC automatically associates the proper device profile with any device participating in a call, based on the value of the User-Agent header received in the SIP messages from the device.

DN State Maintenance

DN state life cycle

The TC layer is responsible for maintaining the states of the DNs, which are currently operating in the cluster. See the high-level description of the TC functionality above: T-Controller.

At startup, the TC layer does not have any information about the DNs in the cluster. The TC layer starts maintaining the state of a DN when either a SIP phone or a T-Library client registers for that DN.

The TC layer deletes the DN state if two conditions are met:

- SIP registration for this DN expires (or does not exist).

- No T-Library clients are registered for this device.
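The life cycle above can be summarized in a short sketch. It is a simplified model under stated assumptions, not SIP Server's implementation: `DnStateTable` and its methods are hypothetical names, and the state kept per DN is reduced to the two facts that decide its lifetime.

```python
from typing import Dict, Set


class DnStateTable:
    """Toy model of DN state creation and deletion in the TC layer."""

    def __init__(self) -> None:
        # dn -> {"sip_registered": bool, "clients": set of client IDs}
        self._states: Dict[str, dict] = {}

    def _entry(self, dn: str) -> dict:
        # A state is created on the first SIP or T-Library registration.
        return self._states.setdefault(
            dn, {"sip_registered": False, "clients": set()}
        )

    def sip_register(self, dn: str) -> None:
        self._entry(dn)["sip_registered"] = True

    def sip_expire(self, dn: str) -> None:
        if dn in self._states:
            self._states[dn]["sip_registered"] = False
            self._cleanup(dn)

    def client_register(self, dn: str, client_id: str) -> None:
        clients: Set[str] = self._entry(dn)["clients"]
        clients.add(client_id)

    def client_unregister(self, dn: str, client_id: str) -> None:
        if dn in self._states:
            self._states[dn]["clients"].discard(client_id)
            self._cleanup(dn)

    def _cleanup(self, dn: str) -> None:
        # Delete only when BOTH conditions hold: no SIP registration
        # and no registered T-Library clients.
        entry = self._states[dn]
        if not entry["sip_registered"] and not entry["clients"]:
            del self._states[dn]

    def has_state(self, dn: str) -> bool:
        return dn in self._states
```

For example, a DN that has lost its SIP registration keeps its state for as long as at least one desktop remains registered for it.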

SIP registration handling

In cluster mode, SIP Server does not work as a SIP Registrar but SIP Proxy still passes all SIP REGISTER messages to SIP Server for the following purposes:

- SIP request authentication: the SIP cluster may be configured to authenticate SIP REGISTER requests.

- DN state maintenance: the TC layer puts the device in or out of service based on the information in the Expires header. It also keeps the registration timer. The TC layer sets a device to the out-of-service state if the SIP registration is not renewed before it expires.

- Device profile linkage: the TC layer uses the User-Agent information to associate a device profile with the registered device. See the Device Profiles section above.
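The registration timer mentioned in the list above might be modeled as follows. This is a sketch under assumptions: `RegistrationTimer` is a hypothetical name, time is passed in explicitly to keep the example deterministic, and an expiration value of 0 is treated as an unregistration, which is standard SIP REGISTER behavior.

```python
from typing import Dict


class RegistrationTimer:
    """Tracks when each DN's SIP registration expires."""

    def __init__(self) -> None:
        self._expires_at: Dict[str, float] = {}

    def on_register(self, dn: str, expires_seconds: int, now: float) -> None:
        if expires_seconds == 0:
            # Expiration of 0 removes the registration.
            self._expires_at.pop(dn, None)
        else:
            # A new or refreshed registration restarts the timer.
            self._expires_at[dn] = now + expires_seconds

    def in_service(self, dn: str, now: float) -> bool:
        # Out of service if never registered or not renewed in time.
        return self._expires_at.get(dn, float("-inf")) > now


timer = RegistrationTimer()
timer.on_register("7001", expires_seconds=60, now=100.0)
print(timer.in_service("7001", now=130.0))  # still within the 60 s window
print(timer.in_service("7001", now=161.0))  # not renewed before expiration
```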

TRegisterAddress handling

There are two distinct scenarios of the TRegisterAddress processing:

- The request is received when a corresponding DN state is already registered in the TC-layer.

- The request is received when the TC-layer has no knowledge about the requested DN.

In the first scenario, the TC-layer responds with the standard EventRegistered, which has the same content as the one in the stand-alone mode.

The second scenario is more involved because the TC does not know whether the requested device is a valid internal DN. This information is stored at the Feature Server. The TC layer first sends a query to the Feature Server to check the validity of the DN. On a positive response, EventRegistered is distributed as in the first scenario. If the Feature Server reports the DN as unknown, EventError is returned to the client in response to the TRegisterAddress request.

If the DN state is registered in the TC-layer as a result of TRegisterAddress, then this device is set to out-of-service state because it does not have SIP registration yet.
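The two scenarios can be condensed into one decision function. This is an illustrative sketch only: `handle_register_address` and the `feature_server_knows_dn` callback are hypothetical, and the returned strings merely name the T-Event that the client would receive.

```python
from typing import Callable, Dict


def handle_register_address(
    dn: str,
    dn_states: Dict[str, dict],
    feature_server_knows_dn: Callable[[str], bool],
) -> str:
    """Return the name of the T-Event sent in response to TRegisterAddress."""
    # Scenario 1: the DN state already exists in the TC layer.
    if dn in dn_states:
        return "EventRegistered"

    # Scenario 2: unknown DN, so ask the Feature Server whether it is valid.
    if feature_server_knows_dn(dn):
        # State created by TRegisterAddress starts out of service,
        # because there is no SIP registration yet.
        dn_states[dn] = {"in_service": False}
        return "EventRegistered"

    return "EventError"


states = {"7001": {"in_service": True}}
valid_dns = {"7001", "7002"}
print(handle_register_address("7002", states, valid_dns.__contains__))
print(handle_register_address("9999", states, valid_dns.__contains__))
```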

Cluster Node Awareness Protocol

At startup, a SIP Server Node (or a SIP Server HA pair) becomes aware of the other SIP Server Nodes in the cluster. It automatically attempts to connect to the T-Controllers of the other nodes in the cluster. Upon a successful connection, the SIP Server Node issues a “retrieve node states” TPrivateService request and obtains a list of all running (Active or Inactive) SIP Server Nodes. Other SIP Server Nodes in the cluster become aware of the new node as they accept its connection requests. In turn, they connect to the new node as well. The SIP Server Node waits for a certain time to allow bulk registrants (Stat Server, ICON, Feature Server) and URS to connect before call processing is started. If no node conflicts are detected, the SIP Server Node initiates self-activation by sending designated TPrivateService requests to the other nodes.

A SIP Server Node that is running and has joined the cluster can be removed from the cluster if both SIP Server applications in the HA pair are shut down using Genesys Framework. If a SIP Server Node that is running and has joined the cluster suddenly fails, other SIP Server Nodes become aware of the failure.

DN namespace is mapped to the SIP Server Nodes, so that there is a specific node owning a DN for a given DN name and set of SIP Server Nodes. On a switchover, DN namespace mapping for the failed primary SIP Server is preserved for a new primary SIP Server.
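The mapping described above behaves like a deterministic function of the DN name and the current set of nodes. The source does not say which algorithm SIP Server uses; the sketch below uses rendezvous (highest-random-weight) hashing purely as an illustration of such a function, and `owner_node` and the node names are hypothetical.

```python
import hashlib
from typing import List


def owner_node(dn: str, nodes: List[str]) -> str:
    """Return the node that owns `dn` for the given set of Active nodes.

    Assumed technique: rendezvous hashing. Each node gets a score for the
    DN, and the node with the highest score is the owner.
    """
    if not nodes:
        raise ValueError("at least one active node is required")

    def score(node: str) -> int:
        digest = hashlib.sha256(f"{node}|{dn}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    return max(nodes, key=score)


nodes = ["node-a", "node-b", "node-c"]
print(owner_node("7001", nodes))

# When a node is added, a DN either keeps its owner or moves to the new node.
print(owner_node("7001", nodes + ["node-d"]))
```

With this kind of mapping, every node can compute the owner of any DN locally from the cluster view, and adding or removing a node moves only a share of the DNs.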

SIP Server States

SIP Server Nodes can be in one of the following states:

- Shutdown—The state of a SIP Server application when its host machine is down or it does not have a network connection. Alternatively, the machine and network are functioning properly but the SIP Server application is not running or malfunctioning.

- Active—The state of a SIP Server Node when it is actively participating in the cluster and handles its share of calls.

- Inactive—The state of a SIP Server Node when it is running but it does not handle calls. The Node does not own any DN states. The node replies to the OPTIONS request with a 503 message instead of the OK. SIP Proxy does not direct new calls to the node.

State Transitions

Cluster administrator can do the following:

- Add, remove, or change the Cluster node description in the configuration database.

- Start or shut down Cluster applications.

During startup, SIP Server applications are going from Shutdown to Inactive state where the following processes occur:

- SIP Server application is started from SCI or GA.

- It reads configured applications from the Cluster Configuration DN.

- It connects to all running SIP Server T-Controller ports (Active and Inactive).

- All SIP Server Nodes update their views with a new Inactive SIP Server Node.

If the number of connected SIP Server nodes is equal to or greater than min-node-count (default is 4), then SIP Server waits for the period of time specified in node-awareness-startup-timeout (default is 5 sec) to allow Stat Server, ICON, Feature Server, and URS to connect to it. Then SIP Server activates itself by triggering the Plus One procedure (described below) internally. If the min-node-count limit is not reached, SIP Server stays in the Inactive state.

During shutdown, SIP Server applications are going from Inactive to Shutdown state where the following processes occur:

- SIP Server application is stopped from SCI or GA.

- SIP Server Node is shut down.

- All SIP Server Nodes detect that SIP Server Node no longer exists through the ADDP protocol.

- All SIP Server Nodes update their views removing the missing SIP Server Node.

When adding a new SIP Server Node, SIP Server applications are going from Inactive to Active state where the following processes occur:

- A new SIP Server Node sends a TPrivateService("Plus One") request to all running SIP Server Nodes.

- Active SIP Server Nodes send a share of their DNs to a new SIP Server Node.

- The new SIP Server Node accepts and processes all DN states.

- The new SIP Server Node starts replying to SIP OPTIONS requests from SIP Proxy with a 200 OK response.

- Inactive SIP Server Nodes monitor the progress and mark the new SIP Server Node as Active upon completion.

As a result, the new SIP Server Node is marked as Active in the cluster view on all SIP Server nodes. SIP Proxy starts distributing 1pcc calls to the new SIP Server Node. The new SIP Server Node starts processing TMakeCall requests for its DNs.

When shutting down a SIP Server Node, SIP Server applications are going from Active to Inactive state where the following processes occur:

- SIP Server Node sends a TPrivateService("Minus One") to all running SIP Server Nodes.

- SIP Server Node being removed transfers all DN states to other SIP Server Nodes and starts replying to SIP OPTIONS requests from SIP Proxy with a 503 response.

- Active SIP Server Nodes receive and process new DN states.

- Inactive SIP Server Nodes monitor the progress and mark the removed node as Inactive upon completion.

As a result, the deactivated SIP Server Node is marked as Inactive in the cluster view on all SIP Server Nodes. All DN states from the deactivated node are equally distributed between Active SIP Server Nodes. SIP Proxy does not distribute 1pcc calls to the deactivated node. The deactivated SIP Server Node does not process any TMakeCall requests.
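The four transitions described in this section can be summarized as a small state machine. This is a sketch for orientation only: the event names (`start`, `plus_one`, `minus_one`, `stop`) and the functions are hypothetical, and a sudden node failure, which takes a node straight to Shutdown, is not modeled.

```python
from enum import Enum
from typing import Optional


class NodeState(Enum):
    SHUTDOWN = "Shutdown"
    INACTIVE = "Inactive"
    ACTIVE = "Active"


# (current state, event) -> next state
TRANSITIONS = {
    (NodeState.SHUTDOWN, "start"): NodeState.INACTIVE,     # application started
    (NodeState.INACTIVE, "plus_one"): NodeState.ACTIVE,    # Plus One procedure
    (NodeState.ACTIVE, "minus_one"): NodeState.INACTIVE,   # Minus One procedure
    (NodeState.INACTIVE, "stop"): NodeState.SHUTDOWN,      # application stopped
}


def next_state(state: NodeState, event: str) -> NodeState:
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"{event!r} is not allowed in state {state.value}")


def options_status(state: NodeState) -> Optional[int]:
    """SIP status code returned to OPTIONS from SIP Proxy, or None if the
    node is not running and therefore cannot answer."""
    if state is NodeState.ACTIVE:
        return 200
    if state is NodeState.INACTIVE:
        return 503
    return None


state = NodeState.SHUTDOWN
for event in ("start", "plus_one", "minus_one", "stop"):
    state = next_state(state, event)
    print(event, "->", state.value, options_status(state))
```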

Transferring DN state

Cluster-aware agent desktops are notified when DN ownership changes. The notification (EventPrivateInfo) contains the address of the SIP Server T-Controller that is the new DN owner. Bulk registrants connected to the T-Controller that was the old DN owner receive a message listing the DNs that are removed. Bulk registrants connected to the T-Controller that is the new DN owner receive a message listing the DNs that are added. Agents must log in again if DN ownership changes. Cluster-aware desktops do that based on the notification; otherwise, the T-Controller waits for the period of time specified in node-awareness-agent-timeout and then forcefully logs the agents out.

Call Handling in a Cluster Environment

Differentiation of internal and external DNs

SIP Server working in cluster mode needs to differentiate between internal DNs and external numbers. This is required for backward compatibility at the T-Library level: T-Events are generated only for internal DNs and not for external numbers. The cluster switch no longer has this information. According to the cluster architecture, the whole list of internal DNs should be stored at the Feature Server (FS), where the dial plan is implemented. SIP Server obtains this information through dial plan requests, which it sends to FS for each call it processes. FS returns the types of the origination and destination devices.

Selecting Device Profile for a DN

A device profile is selected for a DN automatically by matching the value of the 'User-Agent' header received from the corresponding SIP phone against the value of the 'profile-id' parameter of one of the VoIP Service DNs of type 'device-profile'. The value of the 'profile-id' parameter must be a substring of the value of the 'User-Agent' header. The comparison is case sensitive. For the selection procedure to work properly, 'profile-id' values must be unique across all device profiles defined in the cluster switch.

SIP Server can process the 'User-Agent' header received in the SIP REGISTER message. In this case, the value of the header is stored as part of the device state in the TC layer and is used when a 3pcc call is initiated from this device. A 'User-Agent' header received in one of the SIP messages during the call is also used for device profile selection.

If no matching device profile is found based on the received 'User-Agent' header, or if no 'User-Agent' header was received in any of the SIP messages generated by the SIP phone, then the default device profile is used. The default device profile is the one whose 'profile-id' is set to 'default-profile'. If no device profile is found based on the 'User-Agent' header and no default device profile is defined, then a device with all its parameters set to default values is used for call processing.

Once a device profile is selected, all DN-level parameters defined in this profile are applied to the device used for processing the current call. The selection procedure is performed each time a device gets involved in a call.

The device profile mechanism applies only to agent devices, that is, SIP phones. Device profiles are not used for Trunks, Trunk Groups, Media Servers, and similar devices. For those devices, all DN-level parameters are configured explicitly under the device in the cluster switch, the same way as in stand-alone SIP Server mode.
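The selection rules above (case-sensitive substring match, then the default profile, then built-in defaults) can be sketched as follows. The function name, the dictionary layout, and the sample parameter name are hypothetical; only 'profile-id', 'default-profile', and the matching rules come from the text.

```python
from typing import Dict, Optional

DEFAULT_PROFILE_ID = "default-profile"


def select_device_profile(
    user_agent: Optional[str],
    profiles: Dict[str, dict],
) -> dict:
    """Return the DN-level parameters to apply to a device.

    `profiles` maps each profile-id to the parameters of its device profile.
    An empty dict stands for "all parameters at their default values".
    """
    if user_agent:
        for profile_id, params in profiles.items():
            # profile-id must be a substring of User-Agent, case sensitive.
            if profile_id in user_agent:
                return params
    # No header or no match: fall back to the default profile, if defined.
    return profiles.get(DEFAULT_PROFILE_ID, {})


profiles = {
    "Polycom": {"example-option": "a"},        # hypothetical parameter
    "default-profile": {"example-option": "b"},
}
print(select_device_profile("PolycomVVX-VVX_410-UA/5.4.0", profiles))
print(select_device_profile("polycom-lowercase", profiles))  # no match: default
print(select_device_profile(None, profiles))                 # no header: default
```

Because 'profile-id' values are required to be unique and, ideally, not substrings of one another, the iteration order of the profiles should not affect the outcome in a correctly provisioned switch.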

Selecting device profile for different call types

Device profile is selected based on the User-Agent header value received in one of the SIP messages from the device. If this information is not available, then in all the following scenarios, the default device profile is used, or a device is created with all default parameters.

| Scenario | Description |
|---|---|
| Destination device in any call scenario | Original outgoing INVITE sent to a call destination is always created using default-profile. If the device supplies the User-Agent header in the response to the outgoing INVITE sent by SIP Server, then this information is used to select the device profile for the device and to replace the existing one. |
| Origination device in 1pcc inbound call | Device profile is assigned based on the User-Agent header received in the INVITE message from the device originating the call. |
| Origination device of TMakeCall | If the User-Agent header is submitted in the SIP REGISTER request received from this device, then the matching device profile is used. |
| Origination device of TInitiateCall/TInitiateConference | The consultation call origination device uses the same device profile as the one used for this device in the main call. |

Origination Device type detection

To ensure proper origination device type detection, the second topmost Via header in the INVITE requests received from agents' phones must not match the contact value of any of the trunks configured in the Configuration Layer.

In a cluster environment, SIP Server detects an origination device based on the second Via header of a new incoming INVITE request. If the host of the second Via header matches one of the trunks configured in the Configuration Layer, then the device is assumed to be external. Otherwise, the origination device is treated as internal and all necessary T-Events are generated for this device in a call. In a scenario where a call is made to a Routing Point, SIP Server does not consult the Dial Plan to process the incoming call (a performance improvement measure). Instead, it relies on automatic detection of the origination device type. If the destination device is not a Routing Point, then SIP Server queries the Dial Plan. The Dial Plan response contains the types of both the origination and destination devices, so no assumptions are made about device types.
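The automatic detection rule can be illustrated with a short sketch. It makes several simplifying assumptions that are not in the source: trunk contacts are reduced to a set of host names, Via header values are given topmost first, and an INVITE with fewer than two Via headers is treated as internal. The function names are hypothetical.

```python
from typing import List, Set


def via_host(via_value: str) -> str:
    """Extract the host from a Via header value such as
    'SIP/2.0/UDP 10.0.0.5:5060;branch=z9hG4bK1'."""
    # Drop the protocol/transport token, then any ';' parameters.
    sent_by = via_value.split(None, 1)[1].split(";", 1)[0].strip()
    if sent_by.startswith("["):
        # Bracketed IPv6 literal, for example [2001:db8::1]:5060
        return sent_by[1:sent_by.index("]")]
    # Strip an optional ':port' suffix.
    return sent_by.rsplit(":", 1)[0] if ":" in sent_by else sent_by


def origination_is_external(via_headers: List[str], trunk_hosts: Set[str]) -> bool:
    """Apply the second-Via rule: external if its host matches a trunk."""
    if len(via_headers) < 2:
        return False  # assumption: nothing to match, so treat as internal
    return via_host(via_headers[1]) in trunk_hosts


trunks = {"sbc.example.com"}
from_phone = [
    "SIP/2.0/UDP proxy.example.com:5060;branch=z9hG4bKa",
    "SIP/2.0/UDP 10.0.0.5:5060;branch=z9hG4bKb",
]
from_trunk = [
    "SIP/2.0/UDP proxy.example.com:5060;branch=z9hG4bKc",
    "SIP/2.0/TCP sbc.example.com;branch=z9hG4bKd",
]
print(origination_is_external(from_phone, trunks))
print(origination_is_external(from_trunk, trunks))
```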

Related Documentation Resources

The following documentation resources provide general information about SIP Server: