Setting up the Load Balancer in a Multi-tenant Environment

See Also: Setting up the Load Balancer in a Single Tenant Environment

Overview and Architecture

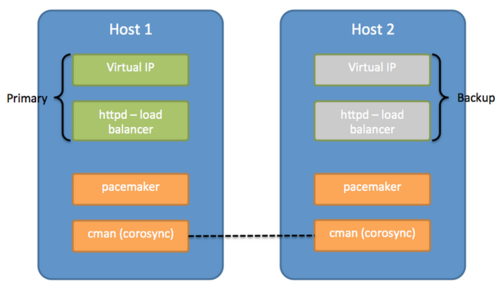

The solution uses a common Linux HA framework from http://clusterlabs.org. There are two components involved in this solution:

- Cman uses corosync internally to provide a platform for membership, messaging, and quorum among the hosts.

- Pacemaker is a cluster resource manager that controls where resources (processes) run. Pacemaker uses resource agents to start, stop, and check the status of processes such as Apache httpd.

The diagram shows a primary/backup design that associates a single virtual IP address with httpd. When the primary host fails, the virtual IP address and the httpd process automatically fail over to the backup host.

Because this is a simple two-host primary/backup solution, both hosts must be deployed on the same subnet, and that subnet must allow UDP multicast. The solution is as reliable as the network that hosts the two machines handling the virtual IP address.

Deploying the Load Balancer

Prerequisites

One of the following operating systems:

- Red Hat Enterprise Linux 6

- Red Hat Enterprise Linux 7, version 7.2 or higher

Installing the OS

Install the required software using the following command:

yum -y install httpd pacemaker cman pcs ccs resource-agents
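After the installation, a quick check such as the following can confirm that the main tools are available. This is a minimal sketch: the function name `missing_commands` is illustrative, and the assumption that these particular commands are provided by the packages above should be verified on your system.

```shell
# Print each command name from the arguments that is not found on PATH.
missing_commands() {
    for c in "$@"; do
        command -v "$c" >/dev/null 2>&1 || echo "$c"
    done
}

# Assumed mapping: httpd (httpd), pcs (pcs), ccs (ccs),
# crm_mon (pacemaker), cman_tool (cman).
missing_commands httpd pcs ccs crm_mon cman_tool
```

No output means that every listed command was found.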

Setting up the HTTP Load Balancer

Any URL configured for the GIR components described in the Multi-Tenant Deployment must now point to the corresponding load balancer URL. For example:

- RPS URL: <loadbalancer URL>/t1/rp/api

- htcc.baseurl should point to the RWS loadbalancer URL: <loadbalancer URL>/t1

- rcs.base_uri should point to <loadbalancer URL>/t1/rcs
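As a cross-check of the three settings above, the following sketch prints the values for a given load balancer URL and tenant prefix. The function name `gir_urls` and the URL `http://lb.example.com` are illustrative assumptions, not product names.

```shell
# Print the URL settings for one tenant.
# $1 = load balancer base URL (no trailing slash), $2 = tenant prefix such as t1.
gir_urls() {
    printf 'RPS URL: %s/%s/rp/api\n' "$1" "$2"
    printf 'htcc.baseurl: %s/%s\n' "$1" "$2"
    printf 'rcs.base_uri: %s/%s/rcs\n' "$1" "$2"
}

gir_urls http://lb.example.com t1
```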

On both servers, create the following files:

- Create /etc/httpd/conf.d/serverstatus.conf, and add the following text:

<Location /server-status>

SetHandler server-status

Order deny,allow

Deny from all

Allow from 127.0.0.1

</Location>

For each tenant, create a separate /etc/httpd/conf.d/loadbalancer_tenantN.conf file, where N is the tenant number. Use the Include directive in the main /etc/httpd/conf/httpd.conf file to include each tenant configuration:

Include /etc/httpd/conf.d/loadbalancer_tenantN.conf

In addition, give each tenant its own balancer rules and ProxyPass directives, and use the following URI conventions (shown here for tenant 1):

- Interaction Recording Web Services
  - http://loadbalancer/t1/api
  - http://loadbalancer/t1/internal-api

- Recording Processor
  - http://loadbalancer/t1/rp

- Recording Crypto Server
  - http://loadbalancer/t1/rcs

- Interaction Receiver
  - http://loadbalancer/t1/interactionreceiver

- WebDAV Server
  - http://loadbalancer/t1/webdav

- For each tenant, create /etc/httpd/conf.d/loadbalancer_tenantN.conf, and add the following text:

The lines starting with BalancerMember specify the URLs of the servers for Interaction Recording Web Services, Recording Processor, Recording Crypto Server, Interaction Receiver, and the WebDAV server.

loadbalancer_tenantN.conf

# Interaction Recording Web Services for tenant 1

<Proxy balancer://t1rws>

BalancerMember http://t1rws1:8080 route=T1RWS1

BalancerMember http://t1rws2:8080 route=T1RWS2

BalancerMember http://t1rws3:8080 route=T1RWS3

Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

ProxySet stickysession=ROUTEID

</Proxy>

ProxyPass /t1/api balancer://t1rws/api

ProxyPass /t1/internal-api balancer://t1rws/internal-api

# RP for tenant 1

<Proxy balancer://t1rp>

BalancerMember http://t1rp1:8889

BalancerMember http://t1rp2:8889

</Proxy>

ProxyPass /t1/rp/api balancer://t1rp/api

# RCS for tenant 1

<Proxy balancer://t1rcs>

BalancerMember http://t1rcs1:8008 connectiontimeout=10000ms route=T1RCS1

BalancerMember http://t1rcs2:8008 connectiontimeout=10000ms route=T1RCS2

Header add Set-Cookie "RCSROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

ProxySet stickysession=RCSROUTEID

</Proxy>

ProxyPass /t1/rcs balancer://t1rcs/rcs

ProxyPassReverseCookiePath "/rcs" "/t1/rcs"

# Interaction Receiver for tenant 1

<Proxy balancer://t1sm>

BalancerMember http://t1ir1

BalancerMember http://t1ir2

</Proxy>

ProxyPass /t1/interactionreceiver balancer://t1sm/interactionreceiver

# WebDAV for tenant 1

<Proxy balancer://t1webdav>

BalancerMember http://t1webdav1

BalancerMember http://t1webdav2 status=H

</Proxy>

ProxyPass /t1/webdav/recordings balancer://t1webdav/recordings

ProxyPass /t1/webdav/dest2 balancer://t1webdav/dest2
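Because each tenant needs its own copy of this file, generating the repetitive parts can reduce copy-and-paste errors. The following is a minimal sketch that prints only the Interaction Recording Web Services section. The function name `rws_section` is illustrative, and the host name pattern (t2rws1, t2rws2, and so on) and port 8080 are assumptions carried over from the tenant 1 example; adjust them to your servers.

```shell
# Print the Interaction Recording Web Services section for one tenant.
# $1 = tenant number, $2 = number of RWS nodes.
# Assumes host names follow the t<N>rws<M> pattern on port 8080.
rws_section() {
    t=$1
    count=$2
    echo "# Interaction Recording Web Services for tenant $t"
    echo "<Proxy balancer://t${t}rws>"
    i=1
    while [ "$i" -le "$count" ]; do
        echo "BalancerMember http://t${t}rws${i}:8080 route=T${t}RWS${i}"
        i=$((i + 1))
    done
    echo 'Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED'
    echo "ProxySet stickysession=ROUTEID"
    echo "</Proxy>"
    echo "ProxyPass /t${t}/api balancer://t${t}rws/api"
    echo "ProxyPass /t${t}/internal-api balancer://t${t}rws/internal-api"
}

# Preview the section for tenant 2 with three RWS nodes.
rws_section 2 3
```

Once the output looks right, it can be redirected into the tenant's loadbalancer_tenantN.conf file, and the remaining sections added in the same way.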

Setting Up Pacemaker and Cman

Disable Autostart for Httpd

Pacemaker manages the startup of httpd, so httpd must not start automatically at boot. Disable it in chkconfig using the following command:

chkconfig httpd off

Setting Up the Hosts File

Make sure that each server has a hostname and that both hostnames are resolvable on both hosts, using either DNS or the /etc/hosts file. The rest of this procedure uses ip1 and ip2 as the hostnames.

# /etc/hosts

# ... keep the existing lines, and only append new lines below

192.168.33.18 ip1

192.168.33.19 ip2
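Before continuing, it can help to confirm on each host that both names are present. The sketch below checks a hosts file for each name. The function name `has_host_entry` is an illustrative assumption, and the check does not cover names that resolve only through DNS.

```shell
# Succeed if host name $2 appears as a name or alias in hosts file $1.
has_host_entry() {
    awk -v name="$2" '
        { sub(/#.*/, "") }    # ignore comments
        { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit found ? 0 : 1 }
    ' "$1"
}

for h in ip1 ip2; do
    if has_host_entry /etc/hosts "$h"; then
        echo "$h: found"
    else
        echo "$h: missing from /etc/hosts"
    fi
done
```

If the names are served by DNS instead, running getent hosts ip1 on each host checks resolution through whichever source is configured.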

Setting Up the Cluster

Run the following commands on each host to create the cluster configuration:

ccs -f /etc/cluster/cluster.conf --createcluster webcluster

ccs -f /etc/cluster/cluster.conf --addnode ip1

ccs -f /etc/cluster/cluster.conf --addnode ip2

ccs -f /etc/cluster/cluster.conf --addfencedev pcmk agent=fence_pcmk

ccs -f /etc/cluster/cluster.conf --addmethod pcmk-redirect ip1

ccs -f /etc/cluster/cluster.conf --addmethod pcmk-redirect ip2

ccs -f /etc/cluster/cluster.conf --addfenceinst pcmk ip1 pcmk-redirect port=ip1

ccs -f /etc/cluster/cluster.conf --addfenceinst pcmk ip2 pcmk-redirect port=ip2

ccs -f /etc/cluster/cluster.conf --setcman two_node=1 expected_votes=1

echo "CMAN_QUORUM_TIMEOUT=0" >> /etc/sysconfig/cman
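Because the same ccs sequence runs on each host, it may be convenient to wrap it in a script. The following is a minimal sketch, not part of the documented procedure. The `run` helper, the `setup_cluster` function, and the `DRY_RUN` variable are illustrative assumptions; with `DRY_RUN=1` (the default here) each command is printed instead of executed, so the sequence can be reviewed first. The final echo line that appends CMAN_QUORUM_TIMEOUT=0 is not included and still has to be run separately.

```shell
CONF=/etc/cluster/cluster.conf
DRY_RUN=${DRY_RUN:-1}   # default to preview; set DRY_RUN=0 to execute

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Issue the ccs commands from this section for the given node names.
setup_cluster() {
    run ccs -f "$CONF" --createcluster webcluster
    for node in "$@"; do
        run ccs -f "$CONF" --addnode "$node"
    done
    run ccs -f "$CONF" --addfencedev pcmk agent=fence_pcmk
    for node in "$@"; do
        run ccs -f "$CONF" --addmethod pcmk-redirect "$node"
    done
    for node in "$@"; do
        run ccs -f "$CONF" --addfenceinst pcmk "$node" pcmk-redirect port="$node"
    done
    run ccs -f "$CONF" --setcman two_node=1 expected_votes=1
}

setup_cluster ip1 ip2
```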

Start the Service

Start the cman and pacemaker services on each host, and enable them at boot, using the following commands:

service cman start

service pacemaker start

chkconfig --level 345 cman on

chkconfig --level 345 pacemaker on

(Optional) Setting Up UDP Unicast

By default, this solution relies on UDP multicast, but it can also work with UDP unicast. To use unicast, edit the /etc/cluster/cluster.conf file and add the transport attribute to the <cman> tag as follows:

...

<cman transport="udpu" two_node="1" expected_votes="1"/>

...

Restart both servers for the changes to take effect.
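A simple text check can confirm on each server that the attribute was saved as intended. This is a minimal sketch; the function name `uses_udpu` is illustrative, and the pattern assumes that the transport attribute sits on the same line as the opening <cman tag.

```shell
# Succeed if the <cman> element in cluster configuration file $1
# carries transport="udpu" on the same line as the tag.
uses_udpu() {
    grep -q '<cman[^>]*transport="udpu"' "$1"
}

if uses_udpu /etc/cluster/cluster.conf; then
    echo "UDP unicast is configured"
else
    echo "UDP unicast is not configured (or the file is not readable)"
fi
```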

Setting Cluster Defaults

Run the following on one of the servers.

pcs property set stonith-enabled=false

pcs property set no-quorum-policy=ignore

pcs resource defaults migration-threshold=1

Configure the Virtual IP Address and Apache httpd

Run the following on one of the servers.

For the first command below, nic=eth0 refers to the network interface that brings up the virtual IP address. Change eth0 to the active network interface your environment uses.

Change <Virtual IP> in the first command below to your virtual IP assigned to this load balancer pair.

pcs resource create virtual_ip ocf:heartbeat:IPaddr2 ip=<Virtual IP> nic=eth0 cidr_netmask=32 op monitor interval=30s

pcs resource create webserver ocf:heartbeat:apache configfile=/etc/httpd/conf/httpd.conf statusurl="http://localhost/server-status" op monitor interval=30s

pcs resource meta webserver migration-threshold=10

pcs constraint colocation add webserver virtual_ip INFINITY

pcs constraint order virtual_ip then webserver
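Once the resources are created, the virtual IP address should be bound to the chosen interface on whichever host is currently active. The following sketch checks for it in the output of the ip command. The function name `has_address` and the address 192.168.33.20 are hypothetical; substitute your own virtual IP address and interface.

```shell
# Succeed if IPv4 address $1 appears in `ip -o -4 addr show` output on stdin.
# The leading space and trailing slash keep 192.168.33.20 from matching
# longer addresses such as 192.168.33.201.
has_address() {
    grep -qF " $1/"
}

# 192.168.33.20 is a placeholder for the virtual IP address.
if ip -o -4 addr show dev eth0 | has_address 192.168.33.20; then
    echo "virtual IP is active on this host"
else
    echo "virtual IP is not active on this host"
fi
```

In a healthy primary/backup pair, the check is expected to succeed on only one of the two hosts at a time.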

Maintaining Pacemaker

The following commands help with Pacemaker maintenance operations.

To check the status of the cluster:

- pcs status

To clear resource errors (for example, because of incorrect configuration):

- pcs resource cleanup <resourcename>, where the resource name is either virtual_ip or webserver (for example, pcs resource cleanup webserver).

To check the status of the resources in the cluster:

- crm_mon -o -1