New Features by Release

New in Hot Fix 8.5.200.12

GRE Memory Monitor

The Memory Monitor is a new feature built into GRE itself. It periodically checks GRE's memory usage and sets the operational state to "over threshold" if memory usage exceeds the configured threshold. The state is reset to "normal" when memory usage drops back below the threshold. It also provides an option (the "adaptive" strategy) to automatically adjust the configured threshold if an out-of-memory error occurs before the threshold is reached (for example, if the configured threshold was set too high).

The memory monitor sets this state in two places:

- status.jsp—This .jsp provides a "health check" URL for load balancers to use. If the memory usage is above the configured threshold, status.jsp returns SYSTEM_STATUS_MEMORY_USAGE_ABOVE_THRESHOLD (HTTP 503 status). Load balancers should be configured to route requests only to GRE nodes whose status.jsp returns SYSTEM_STATUS_OK (HTTP 200 status).

- Genesys Management Layer—The Memory Monitor will also notify Genesys Management Layer if the memory is in an overloaded state by setting the status to SERVICE_UNAVAILABLE.
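The mapping from memory usage to the health-check result can be sketched as follows. This is only an illustration, not GRE's implementation: the function names are hypothetical, and expressing usage and threshold as percentages is an assumption. Only the status strings and HTTP codes are taken from the description above.

```python
from typing import Tuple

# Status strings and HTTP codes as described for status.jsp.
SYSTEM_STATUS_OK = "SYSTEM_STATUS_OK"
SYSTEM_STATUS_MEMORY_USAGE_ABOVE_THRESHOLD = "SYSTEM_STATUS_MEMORY_USAGE_ABOVE_THRESHOLD"


def health_status(memory_usage_pct: float, threshold_pct: float) -> Tuple[int, str]:
    """Return the (HTTP status, status text) a health check would report.

    Hypothetical helper: percentages are an assumed unit.
    """
    if memory_usage_pct > threshold_pct:
        return 503, SYSTEM_STATUS_MEMORY_USAGE_ABOVE_THRESHOLD
    return 200, SYSTEM_STATUS_OK


def is_routable(http_status: int) -> bool:
    """A load balancer should route only to nodes that report HTTP 200."""
    return http_status == 200
```

For example, a node at 85% usage with an 80% threshold would report HTTP 503 and be skipped by the load balancer until usage drops back below the threshold.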

NEW CONFIGURATION OPTIONS.

New in Release 8.5.2

GRS Rules Authoring REST API

In this release, API functions to manage Rule Packages, Rules, Business Calendars, Snapshots and Deployment are provided. Applications or custom user interfaces (which can run in parallel to or instead of the Genesys Rules Authoring Tool web application) can use this API to perform rule authoring and deployment. Please see the GRS REST/API Reference Guide.

Important

The new GRS REST/API Reference Guide supersedes the REST/API section in previous versions of this document, which has been removed.

Cluster Deployment Improvements for Cloud

In this release, partial deployments and auto-synchronization of rules packages between cluster members are now possible.

Background

Before release 8.5.2, a deployment to a GRE cluster succeeded only if it succeeded on every node in the cluster; otherwise the deployment was rolled back and none of the nodes was updated.

What's New?

- Partial deployments—The deployment process can now handle scenarios in which nodes are down or disconnected. GRAT continues deploying directly to the clustered GREs, but now the deployment continues even if it fails on one (or more) of the cluster nodes. A new deployment status—Partial—will be used for such deployments. Users will see the failed/successful deployment status for each node by clicking on the status in GRAT deployment history.

Configuration Option

- allow-partial-cluster-deployment

- Default value—false

- Valid Values—true, false

- Change Takes Effect—Immediately

- If set to true, GRAT can perform a partial deployment for a cluster. If set to false (the old behavior), the entire cluster deployment fails if any single node fails.

- A new "smart cluster" Application template—A new Application template—GRE_Rules_Engine_Application_Cluster_<version>.apd—is implemented to support the new functionality. To configure a cluster with the new features, use this template. Members of the cluster must be of the same type (Genesys Rules Engine applications—the new features are not applicable to Web Engagement engines) and must have minimum version numbers of 8.5.2. Genesys recommends not creating clusters of GREs with mixed 8.5.1/8.5.2 versions.

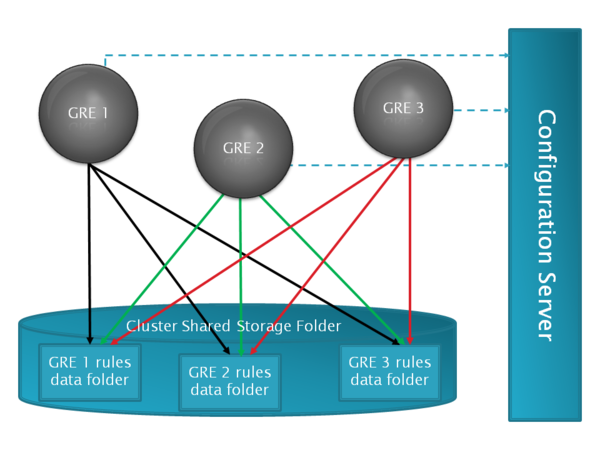

A new shared deployment folder from which rule packages can be synchronized can also be defined. When the cluster is configured to auto-synchronize, the GREs will auto-synchronize when newer rule packages are detected in the shared deployment folder. Auto-synchronization is enabled or disabled using configuration options in the GRE_Rules_Engine_Application_Cluster object in the Genesys configuration environment.

- Auto-synchronization of cluster nodes—Newly provisioned nodes in the cluster, or nodes that have disconnected and reconnected, can be auto-synchronized with other nodes in the cluster.

For a clustered GRE:

- Where the cluster has the new option auto-synch-rules set to true, a cluster shared folder is used to store rules package data. Each clustered GRE node has its own deployment folder in the cluster shared folder. The shared folder enables synchronization of the clustered GREs after a network or connection disruption, or when a new GRE is added to the cluster.

- Where the cluster has the new option auto-synch-rules set to false (default), the deployed rules files will be stored in the location defined in deployed-rules-directory. In such cases a manual redeployment will be required if deployment status is partial or if a new node is joining the cluster.
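As a rough sketch of the behavior described above, the per-node results of a cluster deployment could be summarized as shown here. The function names and the "Successful" and "Failed" labels are assumptions made for illustration; only the Partial status and the two options come from the text.

```python
from typing import Dict


def cluster_deployment_status(node_results: Dict[str, bool], allow_partial: bool) -> str:
    """Summarize per-node results (True = deployed) into one cluster status.

    allow_partial mirrors allow-partial-cluster-deployment on the GRAT application.
    """
    if not node_results:
        raise ValueError("a cluster deployment needs at least one node")
    if all(node_results.values()):
        return "Successful"  # assumed label
    if allow_partial and any(node_results.values()):
        return "Partial"
    # Old behavior (or no node succeeded): the whole deployment fails.
    return "Failed"  # assumed label


def needs_manual_redeploy(status: str, auto_synch_rules: bool) -> bool:
    """Without auto-synch, a partial deployment must be redeployed manually."""
    return status == "Partial" and not auto_synch_rules
```

With auto-synch-rules enabled, a node that missed a partial deployment would instead catch up from the shared folder.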

Configuration Options

- auto-synch-rules (set on cluster application)

- Default Value—false

- Valid Values—true, false

- Change Takes Effect—At GRE (re-)start

- Description—Set this to true to enable a GRE in cluster to start the periodic auto-synch and auto-deployment process. When this value is set to true, options shared-root-directory must be provided and deployed-rules-directory-is-relative-to-shared-root must be set to true for auto-synch to work.

- auto-synch-rules-interval (set on cluster application)

- Default value—5

- Valid Values—Integer

- Change Takes Effect—At GRE (re-)start

- Description—The interval in minutes between the end of the last synchronization check/auto deployment and the start of a new synchronization check.

- synch-rules-at-startup (set on cluster application)

- Default value—false

- Valid Values—true/false

- Change Takes Effect—At GRE (re-)start

- Description—Set this option to true to have the GREs synchronize and deploy rules at startup. It is ignored if auto-synch-rules is set to true (that is, when auto-synch-rules is true, auto-synch is always performed at startup).

This is useful if rules synchronization is required only at startup when auto-synch-rules is set to false.

- shared-root-directory

- Default value—No default

- Valid Values—String

- Change Takes Effect—Immediately

- Description—Specifies the shared root directory. When this option is used and option deployed-rules-directory-is-relative-to-shared-root is set to true, the effective deployed rules directory used by GRE is made by prepending this string to the path specified in deployed-rules-directory. It can be used to specify the mapped path to the shared location used for the auto-synch feature for rules. Having this option empty (or not set) effectively allows setting an absolute path in the deployed-rules-directory even when deployed-rules-directory-is-relative-to-shared-root is set to true. It may be a mapped folder backed by a service like Amazon S3 or simply an OS shared folder.

Examples:

- If shared-root-directory = C:\shared and deployed-rules-directory = \GRE1, then the effective deployed rules directory path used by GRE is C:\shared\GRE1.

- If shared-root-directory = \\10.10.0.11\shared and deployed-rules-directory = \GRE1, then the effective deployed rules directory path used by GRE is \\10.10.0.11\shared\GRE1.

- If the shared folder is mapped on drive Z, so that shared-root-directory = Z: and deployed-rules-directory = \GRE1, then the effective deployed rules directory path used by GRE is Z:\GRE1.

- deployed-rules-directory-is-relative-to-shared-root

- Default value—false

- Valid Values—true, false

- Change Takes Effect—Immediately

- Description—Indicates whether to use the shared root directory as the root directory for the deployed-rules-directory (true).

If GRE belongs to a cluster that has auto-synch-rules or just synch-rules-at-startup enabled then this option must be set to true so that GRE can participate in the auto-synch process. This can be used even when GRE does not belong to a cluster. If this option is set to false, auto-synch will not work.
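The dependencies between these options can be checked before starting the GREs. The following is a minimal sketch, assuming the options have already been read into plain dictionaries of strings; the function names are hypothetical and the messages are illustrative only.

```python
from typing import Dict, List


def _is_true(options: Dict[str, str], name: str) -> bool:
    """Treat a missing option as its documented default of false."""
    return options.get(name, "false").strip().lower() == "true"


def auto_synch_problems(cluster_options: Dict[str, str],
                        gre_options: Dict[str, str]) -> List[str]:
    """List configuration problems that would stop auto-synch from working."""
    problems: List[str] = []
    auto_synch = _is_true(cluster_options, "auto-synch-rules")
    at_startup = _is_true(cluster_options, "synch-rules-at-startup")
    if not (auto_synch or at_startup):
        return problems  # synchronization is not requested at all

    if not _is_true(gre_options, "deployed-rules-directory-is-relative-to-shared-root"):
        problems.append("deployed-rules-directory-is-relative-to-shared-root must be true")
    if auto_synch and not gre_options.get("shared-root-directory", "").strip():
        problems.append("shared-root-directory must be provided")
    return problems
```

An empty list means the option combination is consistent with the descriptions above; it does not verify that the directories exist or are accessible.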

Configuring Shared Folders

You must provide access that enables each GRE to read and write to its own deployment folder and to read data from the other nodes' deployment folders. If the GRE deployment folder does not exist, GRE will try to create it at start-up. When the new option deployed-rules-directory-is-relative-to-shared-root is enabled, the value provided for deployed-rules-directory is treated as relative to the value of shared-root-directory.

For example, if the deployed-rules-directory-is-relative-to-shared-root option is true:

- If the value of the shared-root-directory option is /shared/cluster-A and the value of the deployed-rules-directory option is /foo/GRE_1 then GRE will try to use /shared/cluster-A/foo/GRE_1 as the deployed rules directory.

- If the value of the shared-root-directory option is "" (that is, empty or not set) and deployed-rules-directory option is /foo/GRE_1, then GRE will try to use /foo/GRE_1 as the deployed rules directory.
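The two cases above amount to simple string prepending. A minimal sketch, with a hypothetical function name, follows; it assumes plain concatenation with no normalization of path separators, which matches the examples but may differ from what GRE does internally.

```python
from typing import Optional


def effective_deployed_rules_directory(shared_root: Optional[str],
                                       deployed_rules_directory: str,
                                       relative_to_shared_root: bool) -> str:
    """Return the directory GRE would use for deployed rules.

    The shared root is prepended only when the relative option is true
    and a non-empty shared root is configured.
    """
    if relative_to_shared_root and shared_root:
        return shared_root + deployed_rules_directory
    return deployed_rules_directory
```

Because nothing is normalized here, a shared root with a trailing separator would produce a doubled separator in the result.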

If required, for example in cloud deployments, Customer/Professional Services must make sure that the shared folder is set up in HA mode.

Folder Sharing Schema

Below is an example of how each clustered GRE sees the other GRE nodes' deployed rules folders. In the example below, /sharedOnGre1, /sharedOnGre2 and /sharedOnGre3 all point to the same shared folder, but the shared folder is mapped/mounted differently on each machine.

GRE1

shared-root-directory = /sharedOnGre1

deployed-rules-directory = /GRE1_DEPLOYDIR

GRE2

shared-root-directory = /sharedOnGre2

deployed-rules-directory = /GRE2_DEPLOYDIR

GRE3

shared-root-directory = /sharedOnGre3

deployed-rules-directory = /GRE3_DEPLOYDIR

GRE1 sees the deployed rules folders of the other GREs (GRE2 and GRE3) at the following paths:

GRE2 /sharedOnGre1/GRE2_DEPLOYDIR

GRE3 /sharedOnGre1/GRE3_DEPLOYDIR

GRE2 sees the deployed rules folders of the other GREs (GRE1 and GRE3) at the following paths:

GRE1 /sharedOnGre2/GRE1_DEPLOYDIR

GRE3 /sharedOnGre2/GRE3_DEPLOYDIR

GRE3 sees the deployed rules folders of the other GREs (GRE1 and GRE2) at the following paths:

GRE1 /sharedOnGre3/GRE1_DEPLOYDIR

GRE2 /sharedOnGre3/GRE2_DEPLOYDIR
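The schema above can be reproduced with a few lines of code. This sketch uses a hypothetical function name and assumes each node knows every member's deployed-rules-directory value: a node resolves its peers' folders by prepending its own shared-root-directory to each peer's value.

```python
from typing import Dict


def peer_deploy_folders(own_name: str, own_shared_root: str,
                        deploy_dirs: Dict[str, str]) -> Dict[str, str]:
    """Map each other cluster member to the path where this node sees its folder."""
    return {
        name: own_shared_root + deploy_dir
        for name, deploy_dir in deploy_dirs.items()
        if name != own_name
    }


# deployed-rules-directory values from the example above.
DEPLOY_DIRS = {
    "GRE1": "/GRE1_DEPLOYDIR",
    "GRE2": "/GRE2_DEPLOYDIR",
    "GRE3": "/GRE3_DEPLOYDIR",
}
```

Calling peer_deploy_folders("GRE1", "/sharedOnGre1", DEPLOY_DIRS) yields the two GRE1 paths listed above, and the same call with each node's own mount point yields the others.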

Configuration Steps

To set up the auto-synchronization feature, do the following:

- Shut down the clustered GREs (which must have been created using the GRE_Rules_Engine_Cluster application template).

- Set the following configuration options:

- On the GRAT Application object, set allow-partial-cluster-deployment to true.

- On the new cluster Application object, set auto-synch-rules to true. Optionally, set auto-synch-rules-interval if you require a value other than the default. You can also set synch-rules-at-startup, although this is useful only when auto-synch-rules is set to false.

- On each GRE in the cluster, set deployed-rules-directory-is-relative-to-shared-root to true. Set the value of shared-root-directory per the description above.

- Make sure that in each GRE, the concatenated path (the effective deployed rules directory path) shared-root-directory PLUS deployed-rules-directory makes a valid directory path. If the directory does not already exist, it will be created at GRE start-up. See Cluster Shared Storage Folder for more details.

- Ensure that the clustered GREs have appropriate access rights to create and read files and folders, then start the GREs.

- If you are migrating from a pre-8.5.2 release, re-deploy each rule package in order for auto-synchronization to work. See Configuration Notes below for details.

Configuration Notes

If GRAT’s CME Application ID is replaced (such as in the scenario in Important below), you must do one of the following for auto-synchronization to work correctly. Either:

- Redeploy all the rule packages to the cluster; or

- Update the configuration—this may be preferable to redeploying all rule packages (for example, because of a large number of rule packages)

Important

GRAT’s configuration Application ID changes when you upgrade from GRAT 8.5.1, with rule packages already deployed, to GRAT 8.5.2 and, as part of the upgrade, create new Application objects in CME for GRAT 8.5.2.

Redeploy all the rule packages to the cluster

If auto-synchronization is enabled and deployment to the cluster cannot be performed, follow the steps below to deploy to the GREs individually:

- Temporarily disable auto-synchronization in the GREs by setting option deployed-rules-directory-is-relative-to-shared-root to false.

- Redeploy all the rule packages to the GREs.

- Once the rule packages have been deployed to all the GREs, reset deployed-rules-directory-is-relative-to-shared-root to true.

If auto-synchronization is disabled and deployment to the cluster cannot be performed, the rule packages can be deployed to all the GREs individually without requiring any additional settings.

Update the configuration

In the Tenant configuration, locate the Script named Schedule-XXXX (where XXXX is GRAT’s configuration Application ID) that corresponds to the new GRAT Application. In its Annex settings section, set the next-id option to the value of next-id from the Script that corresponds to the previous GRAT Application.

Option path in Configuration Manager:

Configuration > [Tenant Name] > Scripts > Rule Deployment History > Schedule-[Id of GRAT App] > Annex > settings > “next-id”

Option path in Genesys Administrator:

PROVISIONING > [Tenant Name] > Scripts > Rule Deployment History > Schedule-[Id of GRAT App] > Options (with Advanced View (Annex)) > settings > “next-id”

Example

In this example, the Tenant name is Environment, the new GRAT configuration Application ID is 456, and the old GRAT configuration Application ID is 123.

Using Configuration Manager:

Copy the value of option:

Configuration > Environment > Scripts > Rule Deployment History > Schedule-123 > Annex > settings > next-id

into:

Configuration > Environment > Scripts > Rule Deployment History > Schedule-456 > Annex > settings > next-id

Using Genesys Administrator:

Copy the value of option:

PROVISIONING > Environment > Scripts > Rule Deployment History > Schedule-123 > Options (with Advanced View (Annex)) > settings > next-id

into:

PROVISIONING > Environment > Scripts > Rule Deployment History > Schedule-456 > Options (with Advanced View (Annex)) > settings > next-id

Limitations in the Initial 8.5.2 Release

- The auto-synchronization feature does not include undeploy functionality.

- A GRE cannot be a member of more than one cluster. This is because GRE checks all the clusters in the Genesys configuration environment to see which one has a connection to the GRE. If there are multiple such clusters, only the first one found is considered; any others are ignored.

- GRE can operate either singly or as part of a "smart cluster", but not both.

- High Availability (HA) for the cluster shared folder is not currently implemented. If HA is required, for example in multi-site deployments, Professional Services must make sure that the shared folder is set up in HA mode.

Support for WebSphere Clustering for Cloud

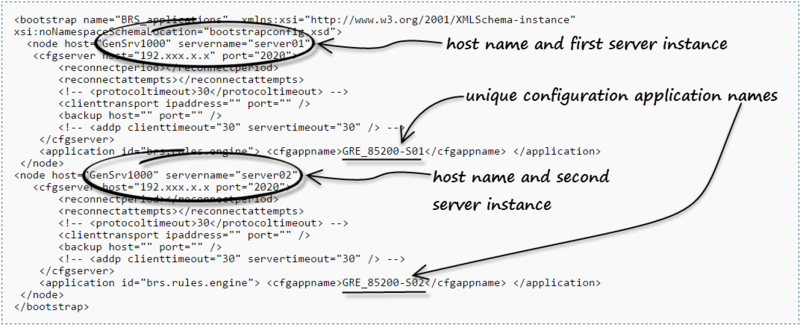

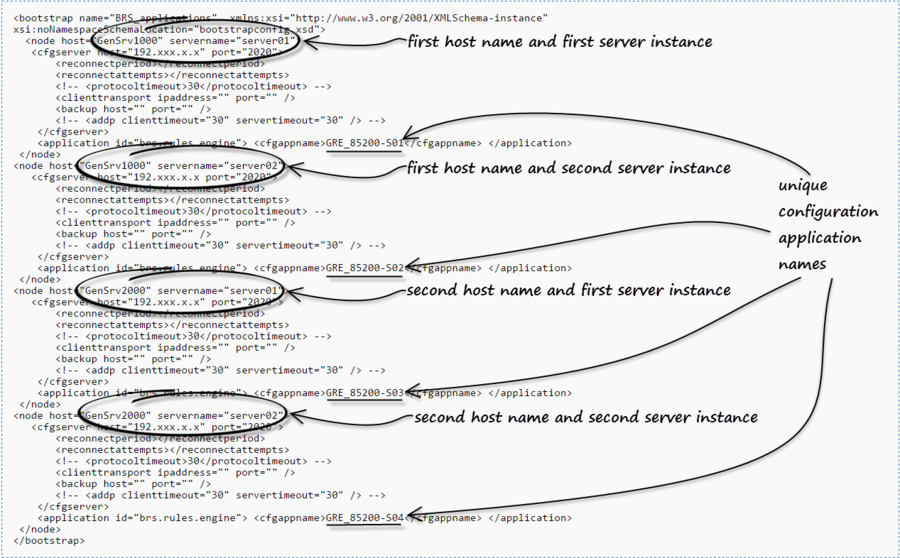

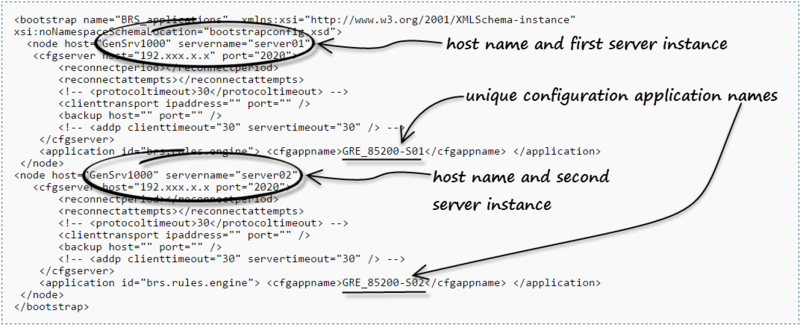

In this release, it is now possible to define multiple nodes for the same host by using an additional attribute called servername in the node definition.

Background

Before release 8.5.2 of GRS, it was not possible to configure multiple cluster nodes running on the same machine and controlled by the same cluster manager, because separate entries for the same host could not be created in bootstrapconfig.xml to represent different GRE nodes. The pre-8.5.2 format of bootstrapconfig.xml allowed only a single node to be defined per host. The XML format was as follows:

    <xs:complexType name="node">
      <xs:sequence>
        <xs:element name="cfgserver" type="cfgserver" minOccurs="1" maxOccurs="1"/>
        <xs:element name="lcaserver" type="lcaserver" minOccurs="0" maxOccurs="1"/>
        <xs:element name="application" type="application" minOccurs="1" maxOccurs="unbounded"/>
      </xs:sequence>
      <xs:attribute name="host" type="xs:string"/>
      <xs:attribute name="ipaddress" type="xs:string"/>
    </xs:complexType>

What's New?

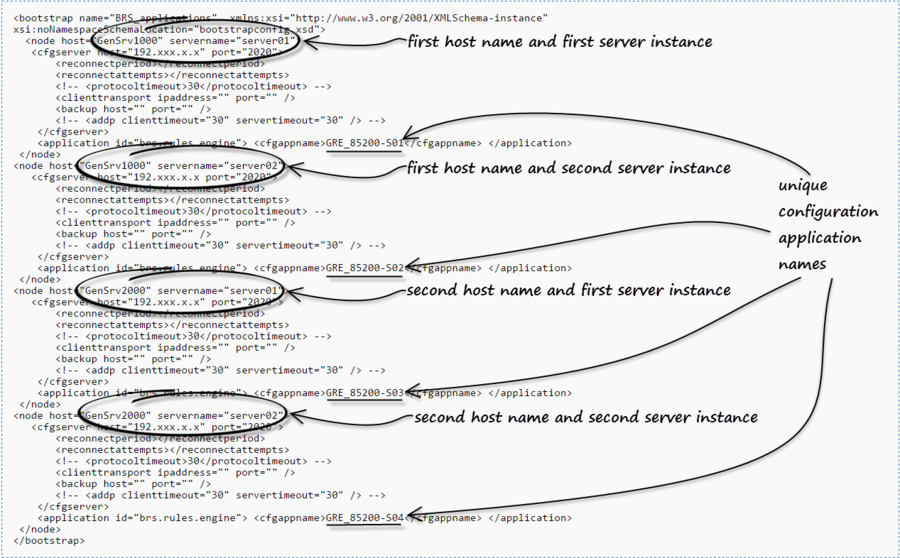

In GRS 8.5.2, an additional attribute called servername has been added to the node definition. This makes it possible to define multiple nodes for the same host. The server name is defined via the WebSphere Application Server (WAS) Deployment Manager when the cluster node is created.

For example, you can replicate the “node” definition for each GRE that is running on the same host. Then, by adding servername=, you can make the entry unique. Each entry then points to the corresponding Configuration Server application for that GRE instance. In this way, a single bootstrapconfig.xml file can be used to define all nodes in the WebSphere cluster, whether or not there are multiple GRE nodes defined on a given host.

To ensure backward compatibility, if no node in bootstrapconfig.xml matches both the hostname and the servername, the node that matches the hostname and has no servername defined serves as the default.
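The matching rule, including the backward-compatible fallback, can be sketched as a two-pass lookup. This is an illustration only: the function name is hypothetical, node definitions are represented as plain dictionaries of their attributes, and exact (case-sensitive) string comparison is an assumption.

```python
from typing import Dict, List, Optional


def select_node(nodes: List[Dict[str, str]], host: str,
                servername: str) -> Optional[Dict[str, str]]:
    """Pick the node definition that applies to this host and server instance."""
    # First pass: a node that matches both host and servername.
    for node in nodes:
        if node.get("host") == host and node.get("servername") == servername:
            return node
    # Fallback: a pre-8.5.2 style node for the host with no servername defined.
    for node in nodes:
        if node.get("host") == host and not node.get("servername"):
            return node
    return None
```

With this ordering, an existing single-node file keeps working after an upgrade, while files that add servername entries take precedence for the instances they name.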

Editing the bootstrapconfig.xml file

To edit this file, manually extract the bootstrapconfig.xml file from the .war file, edit and save the bootstrapconfig.xml file, then repackage the bootstrapconfig.xml file back into the .war file.
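Because a .war file is a ZIP archive, the extract, edit and repackage cycle can also be scripted. The sketch below uses only the Python standard library and writes a new archive rather than modifying the original in place. The function name is hypothetical, and the location of bootstrapconfig.xml inside the .war is not stated here, so it is passed in as a parameter.

```python
import zipfile


def replace_war_entry(war_path: str, entry_name: str,
                      new_content: bytes, output_path: str) -> None:
    """Copy a .war to output_path, replacing the content of one entry."""
    with zipfile.ZipFile(war_path, "r") as src:
        if entry_name not in src.namelist():
            raise KeyError(f"{entry_name} not found in {war_path}")
        with zipfile.ZipFile(output_path, "w", zipfile.ZIP_DEFLATED) as dst:
            for info in src.infolist():
                if info.filename == entry_name:
                    # Write the edited file under the original entry name.
                    dst.writestr(info, new_content)
                else:
                    # Copy every other entry unchanged.
                    dst.writestr(info, src.read(info.filename))
```

Keeping the original .war untouched makes it easy to fall back if the edited bootstrapconfig.xml turns out to be invalid.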

Sample bootstrapconfig.xml files

Important

Terminology: In the bootstrapconfig.xml files, the <node> element corresponds to an individual member of a WebSphere cluster.

For a cluster with one host and two server instances on that host

Below is a sample bootstrapconfig.xml definition for a GRE cluster running on one host, GenSrv1000, with server instances server01 and server02 on that host:

For a cluster with two hosts and two server instances on each host

Below is a sample bootstrapconfig.xml definition for a GRE cluster running on two hosts, GenSrv1000 and GenSrv2000, with server instances server01 and server02 on each host:

See also an additional WebSphere configuration change required for auto-synchronization to work.

Support for Safari 8.x

Release 8.5.2 supports the Safari 8.x browser.

Enable/Disable Business Calendars

Genesys Web Engagement did not originally support Business Calendars in its Complex Event Processing (CEP) engine. However, support is being added in release 8.5.0. Use the new GRAT enable-cep-calendars configuration option to enable or disable business calendars for rules that are based on a CEP template.

CONFIGURATION OPTION

New in 8.5.1

New Features in 8.5.1 (new document)

New in 8.5.0

New Features in 8.5.0 (new document)